Sometime in March 2018, the Sri Lankan government blocked access to Facebook, citing the spread of hate speech on the platform and tying it to the incidents of mob violence in Digana, Kandy.

While some lauded the move, the reality is that Sri Lanka has a long history of racial violence cultivated by political actors. The state has also long had the legal power to arrest instigators of racial violence, under the ICCPR Act No. 56 of 2007, yet did precisely nothing. Instead, it chose a form of collective punishment for the entire population while deflecting criticism over the lack of police protection for the citizens under attack, something it did again in even more dire circumstances.

Nevertheless, it did something interesting: it brought Facebook to the table.

To set the record straight: the Data, Algorithms and Policy team at LIRNEasia has engaged with Facebook before. Our interest is in using data to inform public policy, and Facebook happens to be one of the greatest data troves on earth. Out of that engagement came, for instance, our finding that the Facebook friend network, at international scale, is tightly linked with bilateral migration and trade for 200 countries [1]. Shortly after the blocks, we used 60,000 Facebook posts and their posting times to show that the social media blocks were ineffectual, ethically wrong, and possibly compromised the personal security of users who were VPN’ing their way around them.
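
How a posting-time analysis can show that a block was ineffectual is easier to see with a toy example. The sketch below is one simple check and not necessarily the method behind our analysis. It assumes only a list of post timestamps and two date windows, one before a block and one during it. The function names, the timestamps and the windows are all hypothetical. If average daily posting barely drops during the block, users were probably carrying on regardless, for example via VPNs.

```python
from datetime import date, datetime

def mean_daily_posts(timestamps, start, end):
    """Average posts per day over the inclusive date window [start, end]."""
    days = (end - start).days + 1
    if days <= 0:
        raise ValueError("end must not be before start")
    # Days with no posts still count in the denominator.
    n_posts = sum(1 for ts in timestamps if start <= ts.date() <= end)
    return n_posts / days

def block_ratio(timestamps, before, during):
    """Mean daily posts during a block divided by the mean before it.

    `before` and `during` are (start_date, end_date) tuples.
    A ratio close to 1.0 means the block barely dented activity.
    """
    baseline = mean_daily_posts(timestamps, *before)
    if baseline == 0:
        raise ValueError("no posts in the baseline window")
    return mean_daily_posts(timestamps, *during) / baseline

# Toy timestamps and windows, purely illustrative (not real data).
posts = [
    datetime(2018, 3, 1, 9), datetime(2018, 3, 1, 21),
    datetime(2018, 3, 2, 8), datetime(2018, 3, 2, 23),
    datetime(2018, 3, 3, 10), datetime(2018, 3, 4, 11), datetime(2018, 3, 4, 22),
]
ratio = block_ratio(posts,
                    (date(2018, 3, 1), date(2018, 3, 2)),
                    (date(2018, 3, 3), date(2018, 3, 4)))
print(ratio)  # 0.75
```

Days with no posts still count in the denominator. As a result, a block that silences some pages entirely shows up as a drop in the ratio rather than vanishing from the data.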

To get an idea of how Facebook works, imagine a government spanning 2.3 billion users. Now imagine that said government believes that it is not a government, and instead chooses to operate as a many-headed hydra. A good half of the heads you talk to have absolutely no idea what’s going on elsewhere. The other half are moving fast and breaking things. There seem to be a bunch of well-intentioned lawyers going around trying to prevent all these heads from saying something stupid. Meanwhile a bunch of adventurers (mostly journalists and civil society) have broken down the door to the dungeon and are demanding answers and waving rather pen-shaped swords about.

This structure works, no doubt, for rapid technological innovation. It doesn’t really work for multinational issues such as hate speech. Much of the chatter was a nodding of heads and very politely spoken catchphrases that ultimately went nowhere. There was also a lot of discussion of ‘AI’ and how much better Facebook’s models were getting at Sinhala, the most-spoken language in Sri Lanka and the language in which most hate speech manifests.

Seeing that policy change would take time, we instead focused on interrogating the technicalities: if Facebook was so good at AI, what exactly was preventing it from removing hate speech from its platform? Whatever claims they make about accuracy, hate speech clearly exists in Sinhala on Sri Lankan Facebook. Not only were they failing to remove it, they were on occasion going after the activists fighting it.

THE PROBLEM WITH HATRED

Hate speech, for those not in the field, is an extraordinarily complex problem. I prefer to view hate as a beast mutating constantly across four dimensions: it varies with location, time, the in-groups and out-groups in the conversation, and the political context in which it’s said. It’s complex enough that even in English, where it has been studied fairly well, there are multiple definitions of hate speech [2] [3], and the available training data is biased depending on which definition its creators adopted. It’s a little like Jacobellis v. Ohio, 378 U.S. 184 (1964), where Justice Potter Stewart wrote about porn: “I shall not today attempt further to define the kinds of material I understand to be embraced within that shorthand description; and perhaps I could never succeed in intelligibly doing so. But I know it when I see it.”

“I know it when I see it” works for human judges. It does not work so well for machine judgement, where, even with deep learning, a tight definition of the problem is critical to assessing accuracy.

And this is in English. If you’re familiar with ASOIAF, English is a bit like the Night King of languages. It’s decidedly West Germanic, it’s conquered vast swathes of the population, and every enemy it touches becomes a part of its army. Most of the languages in the Global South, including Sinhala, are the thousands of named characters who end up dying grisly deaths; there’s some interesting backstory and pedigree, but at some point either the cold or dragonfire or a random Baratheon will bash your head in. These languages are called resource-poor.

When we began interrogating why Facebook’s AI didn’t work so well in Sinhala, we came to the inevitable conclusion that much of the essential underlying data was simply not available, not just to Facebook but to researchers like us as well. Whereas in English it’s child’s play to import a Python library and access basic text corpora and stopwords, these critical building blocks are far less developed in resource-poor languages [4]. For example, fastText, a library for learning word embeddings and text classification, offers pre-trained Sinhala vectors trained on Wikipedia and Common Crawl text. Most Sinhala speakers will readily agree that the language therein is the formal variant of Sinhala, a far cry from the colloquial variant (Sinhala is diglossic, exhibits codemixing with English, and there’s evidence of further linguistic drift between colloquial speech and colloquial speech typed out on a smartphone keyboard). Understanding hate speech requires detailed study of the actual language people use to sling it about, and we are probably five years from a robust understanding.

We wrote a rather expansive discussion paper [4] on this, and a much shorter version for Foreign Policy. TL;DR: before we could even consider combating hate, we didn’t seem to have the basics on hand.

THE GRUNT WORK

Thus began our project to compile an easily available, open-access language dataset of colloquial speech. Here Facebook came in handy: the entire platform is one giant text repository. We could (and did) simply scrape data, but basic ethics and human decency dictate that we refrain from Cambridge Analytica-style tactics.

Facebook has a platform called CrowdTangle, which was notoriously difficult to get access to if you were a think tank in the Global South; we put forth our request at the tail end of 2018. Eventually, towards the end of 2019, we were given access, and we began compiling corpora. In particular, I’d like to thank Sarah Oh of the Facebook Crisis Response team, who pushed hard to make access happen.

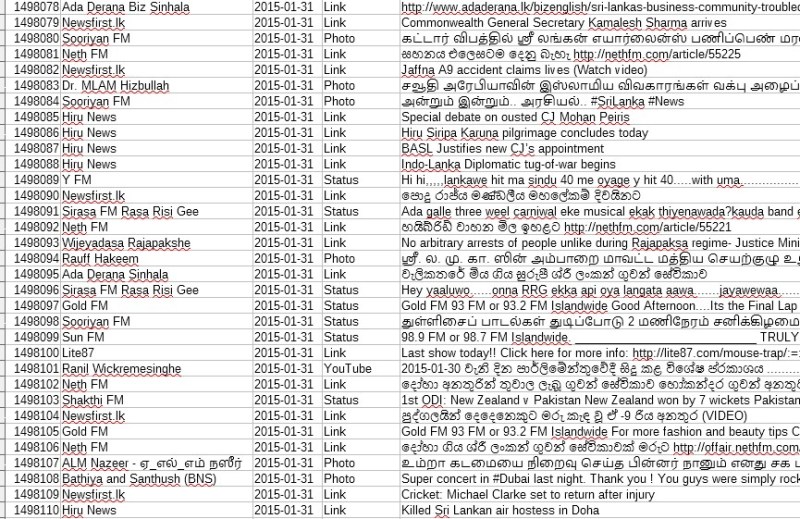

So here is the first of our results: https://github.com/LIRNEasia/FacebookDecadeCorpora

Two language corpora of colloquial Sinhala content extracted from Facebook.

Corpus-Alpha

The larger of the two corpora spans trilingual text posted by 533 Sri Lankan Facebook pages, covering politics, media, celebrities and other categories, from 2010 to 2020. It contains 28,825,820 to 29,549,672 words of text, mostly in Sinhala, English and Tamil (the three main languages used in Sri Lanka). Because it retains URLs, punctuation and other noise, it is better suited to discourse analysis and the study of codemixing in colloquial Sinhala.
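
A first step in studying codemixing in a trilingual corpus is tagging each token with its script. The sketch below is illustrative, not part of the released tooling. It uses the Unicode block ranges for Sinhala (U+0D80 to U+0DFF) and Tamil (U+0B80 to U+0BFF) and treats ASCII letters as English. The function names are hypothetical. One caveat: Sinhala typed in romanized form (“Singlish”) will be counted as Latin script.

```python
from collections import Counter

SINHALA = range(0x0D80, 0x0E00)  # Unicode Sinhala block
TAMIL = range(0x0B80, 0x0C00)    # Unicode Tamil block

def char_script(ch):
    """Return the script of one character, or None for digits, punctuation, ZWJ, etc."""
    cp = ord(ch)
    if cp in SINHALA:
        return "sinhala"
    if cp in TAMIL:
        return "tamil"
    if ch.isascii() and ch.isalpha():
        return "latin"
    return None

def token_script(token):
    """Label a whitespace-delimited token by the majority script of its letters."""
    scripts = Counter(s for s in map(char_script, token) if s)
    if not scripts:
        return "other"
    return scripts.most_common(1)[0][0]

def script_profile(text):
    """Count tokens per script: a crude measure of codemixing in a post."""
    return Counter(token_script(tok) for tok in text.split())

print(script_profile("hello ශ්‍රී லங்கா 2020"))
```

A post with substantial counts in more than one script is a candidate codemixed post. Romanized Sinhala would still need a separate classifier.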

Corpus-Sinhala-Redux

The smaller corpus amounts to 5,402,76 words of only Sinhala text extracted from Corpus-Alpha. It has been cleaned of URLs, punctuation and noise.
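
Reducing a noisy trilingual corpus to Sinhala-only text can be done in several ways. The sketch below shows one plausible approach under stated assumptions; it is not the pipeline we actually used. It drops URLs with a regular expression, then drops every run of characters outside the Sinhala block. The function name `clean_sinhala` is hypothetical. One detail matters here: Sinhala uses the zero-width joiner (U+200D) inside conjuncts such as yansaya and rakaransaya, so stripping it as “noise” would corrupt words. The sketch keeps ZWJ inside tokens and removes it only at token edges. It also excludes the Sinhala punctuation mark kunddaliya (U+0DF4).

```python
import re

URL_RE = re.compile(r"https?://\S+|www\.\S+")
# Anything that is not Sinhala (U+0D80..U+0DF3, excluding punctuation U+0DF4),
# not ZWJ, and not whitespace is treated as noise.
NON_SINHALA_RE = re.compile(r"[^\u0D80-\u0DF3\u200D\s]+")
ZWJ = "\u200d"

def clean_sinhala(text):
    """Strip URLs and non-Sinhala characters, preserving ZWJ inside words."""
    text = URL_RE.sub(" ", text)
    text = NON_SINHALA_RE.sub(" ", text)
    # A ZWJ left dangling at a token edge is debris, not part of a conjunct.
    tokens = (tok.strip(ZWJ) for tok in text.split())
    return " ".join(tok for tok in tokens if tok)

print(clean_sinhala("see https://example.com ශ්‍රී lanka! 2020"))
```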

Both corpora have markers for their date of creation, page of origin, and content type. Everything is CC-4.0 – use as you will, even commercially, as long as you give credit and don’t slap on license terms that prevent others from using the data after you.

We’ve also provided stopwords.txt, a list of algorithmically derived stopwords extracted from Corpus-Sinhala-Redux. They differ from classical dictionary-derived stopwords in all sorts of interesting ways, ranging from overwhelming chatter about certain political parties to quirks in the Unicode implementation of Sinhala and Facebook’s hacks to display certain diacritics.
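
It helps to see what “algorithmically derived” can mean in practice. A common approach, assumed here rather than taken from how stopwords.txt was actually built, is to rank tokens by document frequency (the number of posts they appear in) and keep the top k. The function name and the `top_k` parameter are hypothetical, and the toy documents stand in for real posts.

```python
from collections import Counter

def derive_stopwords(documents, top_k=100):
    """Return the top_k tokens ranked by document frequency.

    Ties are broken alphabetically so the output is deterministic.
    """
    doc_freq = Counter()
    for doc in documents:
        doc_freq.update(set(doc.split()))  # count each token once per document
    ranked = sorted(doc_freq.items(), key=lambda item: (-item[1], item[0]))
    return [token for token, _ in ranked[:top_k]]

docs = ["a b c", "a b", "a d"]  # toy documents
print(derive_stopwords(docs, top_k=2))  # ['a', 'b']
```

A frequency-ranked list has no notion of grammar, which is why it picks up topical words. The names of political parties that dominate the conversation are one example; a dictionary-derived list would never include them.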

This isn’t a solution for hate speech. But someone has to lay the foundations. Hopefully, others (researchers, software developers, academics) can now come in and start building the house. As the novelist Anne Lamott wrote in Bird by Bird:

“Thirty years ago my older brother, who was ten years old at the time, was trying to get a report written on birds that he’d had three months to write, which was due the next day. We were out at our family cabin in Bolinas, and he was at the kitchen table close to tears, surrounded by binder paper and pencils and unopened books about birds, immobilized by the hugeness of the task ahead. Then my father sat down beside him, put his arm around my brother’s shoulder, and said, ‘Bird by bird, buddy. Just take it bird by bird.’”

[1] Wijeratne, Y., Lokanathan, S., & Samarajiva, R. (2018, March). Countries of a Feather: Analyzing Homophily and Connectivity Between Nations Through Facebook Data. TPRC.

[2] Wijeratne, Y. (2018). The control of hate speech on social media: Lessons from Sri Lanka. CPR South.

[3] MacAvaney, S., Yao, H. R., Yang, E., Russell, K., Goharian, N., & Frieder, O. (2019). Hate speech detection: Challenges and solutions. PLoS ONE, 14(8), e0221152.

[4] Wijeratne, Y., de Silva, N., & Shanmugarajah, Y. (2019). Natural Language Processing for Government: Problems and Potential. LIRNEasia.
